Should you gamify your ESM / EMA study?

TL;DR

Gamifying experience sampling (ESM) / ecological momentary assessment (EMA) studies with real-time rewards could boost response rates and response speed, but at the cost of slightly lower data quality. Our article shows why balancing quantity and quality matters and explains how researchers can use gamification wisely.

If you’ve ever run an experience sampling (ESM) / ecological momentary assessment (EMA) study, you know the struggle: participants start strong, but over time response rates slip. Skipped surveys mean missing data, and missing data threatens your ability to obtain valid and robust answers to your research questions.

So here’s the question: Could adding some fun, like game rewards, help people keep completing their momentary surveys?

Gamification is everywhere: from fitness apps that give you badges for important health milestones to language apps that reward interaction streaks. But in science, where the quality of data is everything, do game rewards really help, or could they backfire?

That’s what our m-Path team tested in a real-life experiment.

The experiment at a glance

We recruited 193 participants for a 10-day ESM / EMA study. Each participant got 10 notifications per day, using m-Path. After each survey, they either:

- Control group: Received nothing extra.

- Gamification group: Immediately received in-app virtual coins plus fireworks 🎉. They could save these coins or spend them on fun facts (extrinsic rewards) or personalized graphs (intrinsic rewards).

We also added game elements like streak bonuses 💰, a coin wallet 👛, and a reward banner 🏆. In short, we built a mini-economy inside the app.

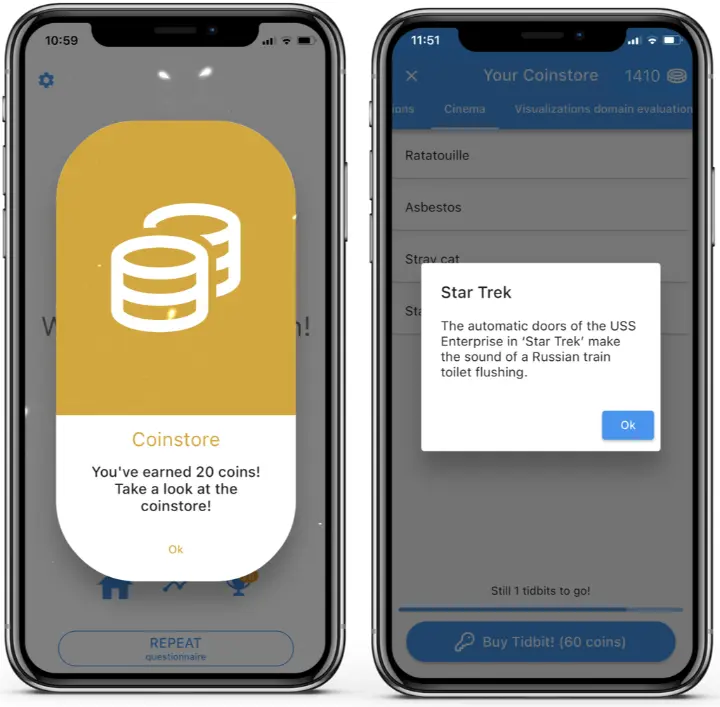

m-Path screenshots that visualize the immediate coin award and an unlocked fun fact.

What we found

1. More responses, but only if people used the store.

Participants who actually spent their coins completed more surveys. Just giving coins wasn’t enough; the real motivator was exchanging them for fun facts or personal graphs.

2. Faster responses.

Those same participants opened surveys more quickly. Rather than letting notifications linger, they acted on them sooner, so their responses were captured closer to the moment of the prompt and were more likely to be ecologically valid.

3. A trade-off in quality.

However, here’s the twist: gamified participants were paradoxically slightly less reliable in their answers. When an item was repeated in the same survey (a test-retest reliability procedure), their ratings showed more inconsistency. This suggests that while gamification nudged them to respond, they may have done so with less attention or care.

4. No speed boost in completion.

Survey completion times stayed the same across conditions. So gamification didn’t make people rush through the actual questions.

Why this matters for your research

At first glance, gamification looks like a win: higher response rates and faster engagement that really captures people "in the moment". But if it comes with lower reliability, researchers need to weigh the trade-off carefully.

- Good for engagement: Gamification may help prevent drop-off, especially in long or intensive studies.

- Risk for data quality: If participants focus too much on rewards rather than reflection, the answers may be noisier.

In other words: more isn’t always better. For high-stakes research questions, quantity cannot come at the cost of quality.

Practical take-aways

So how can you use gamification without undermining the quality of your data? We see a few ways forward.

- Combine incentive strategies: Use gamification alongside traditional incentives (e.g., fair compensation, clear communication and frequent check-ins, fostering a good participant-researcher alliance, highlighting participants' contribution to science).

- Design for balance: Don’t let the game overshadow the survey. Rewards should support attention, not distract from it.

- Choose reward type carefully: We found that fun facts and personal graphs worked equally well, on average. However, think about what fits your study population (e.g., patients may see greater value in personalized ESM / EMA feedback).

- Monitor reliability: Include checks (like repeated items) to assess data reliability and to catch careless or inattentive responding.
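
To make the repeated-item check concrete, here is a minimal Python sketch of one way to monitor reliability. It is an illustration under stated assumptions, not the analysis from our paper: the function names, the 0-100 rating scale, the example data, and the threshold of 20 points are all hypothetical. The idea is to take the absolute difference between the first and the repeated rating of the same item within a survey, average it per participant, and flag participants whose average gap is unusually large.

```python
from collections import defaultdict
from statistics import mean


def repeat_item_inconsistency(responses):
    """Mean absolute gap between an item and its within-survey repeat.

    `responses` is an iterable of (participant_id, first_rating, repeat_rating)
    tuples, one per completed survey. This layout is an assumption made for
    the example, not an m-Path export format.
    """
    gaps = defaultdict(list)
    for participant_id, first_rating, repeat_rating in responses:
        gaps[participant_id].append(abs(first_rating - repeat_rating))
    return {pid: mean(values) for pid, values in gaps.items()}


def flag_inconsistent(scores, threshold):
    """Return the participants whose mean gap exceeds `threshold`, sorted by id."""
    return sorted(pid for pid, score in scores.items() if score > threshold)


# Made-up ratings on an assumed 0-100 slider scale.
responses = [
    ("p01", 60, 62),
    ("p01", 35, 30),
    ("p02", 80, 20),
    ("p02", 10, 55),
]

scores = repeat_item_inconsistency(responses)
flagged = flag_inconsistent(scores, threshold=20)  # threshold is arbitrary here
print(scores)
print(flagged)
```

A suitable threshold depends on your rating scale and population, so it may be safer to inspect the distribution of scores (and compare conditions) before excluding anyone.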

A step toward smarter ESM incentives

Our experiment shows that momentary incentives in ESM / EMA aren’t one-size-fits-all. Financial rewards boost compliance but may be impractical or unethical in some contexts. Gamification is flexible and cost-effective, but it’s not risk-free.

That’s why we’ve made our gamification building blocks openly available in m-Path. Researchers can now test, adapt, and refine these features in their own studies.

Check out our dedicated manual page to learn how to add default or custom awards to gamify your ESM / EMA study.

👉 Explore the full paper here: Computers in Human Behavior, 2024.