What makes a good ESM / EMA survey item?

TL;DR

Until now, there was no shared way to judge whether a survey item in experience sampling (ESM) / ecological momentary assessment (EMA) research was actually good. The new ESM-Q fills that gap: a consensus-based checklist that defines what “quality” means for ESM / EMA questions. It offers 10 core and 15 supplementary criteria (covering everything from simple wording to time-frame clarity) to help researchers design, select, and report better daily-life measures.

If you’ve ever designed an experience sampling (ESM) / ecological momentary assessment (EMA) study, you’ve probably asked yourself at some point: “Is this the right item to capture what I want to measure?”

For years, researchers have built their own daily-life questionnaires from scratch, sometimes adapting trait scales, sometimes writing ad hoc items on the fly. The result? Hundreds of subtly different ways to ask “How do you feel right now?” and very little agreement on which ones are (more) reliable or valid.

That’s the problem the open-science ESM Item Repository initiative set out to fix.

A long-overdue step for ESM / EMA measurement

In a recent publication led by our former KU Leuven colleagues, 31 researchers introduced the first consensus-based quality assessment tool for ESM / EMA items: the ESM-Q.

Their motivation was simple but pressing: despite the explosion of daily-life research, our field still lacks shared standards for item quality. Recent reviews paint a concerning picture:

- Only about 30% of ESM / EMA studies reuse items that have been used in other ESM / EMA studies. The remaining majority either borrow “momentary” items from retrospective questionnaires (often not designed for real-time assessment) or create new items on the spot without prior validation.

- Crucially, most ESM / EMA papers do not report any psychometric information about the reliability or validity of their items.

- This absence of transparency allows low-quality items to spread across ESM / EMA studies, undermining the cumulative strength of our field.

The problem is obvious: without insight into item quality, even the most elegant ESM / EMA designs may rest on shaky ground.

What is the ESM-Q?

The ESM-Q is the outcome of a four-round Delphi study involving 42 experts across more than 20 institutions worldwide. Through iterative rounds of proposing, rating, and discussing potential item-quality criteria, the group reached consensus on 25 key principles.

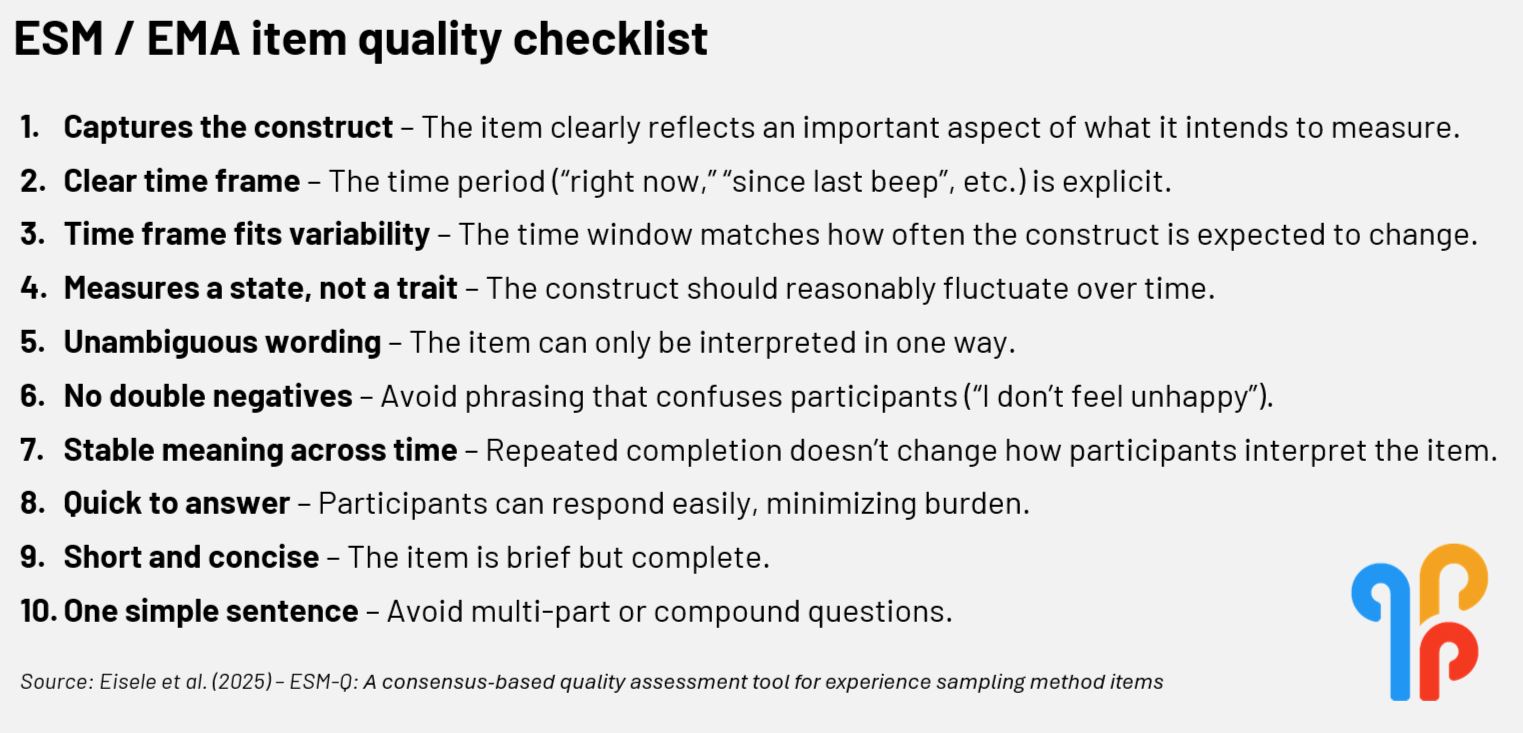

1. The 10 core criteria

These apply to any ESM / EMA item, regardless of the study. The figure below summarizes them:

The 10 core criteria to design an appropriate ESM / EMA survey item, following the ESM-Q consensus.

2. The 15 supplementary criteria

These come into play when extra information is available (e.g., about the population, sampling scheme, or full questionnaire). They include aspects like appropriate anchors, population-specific wording, and consistency within multi-item measures. You can review them here.

A few of our favorite criteria (and why they resonate)

“The participant can answer the item quickly.”

ESM / EMA lives and dies by compliance. If your questions take too long, people stop answering.

“The meaning of the item is unlikely to change over the course of the study.”

Repeated assessment can itself change how participants interpret an item. This criterion is an elegant nod to reconceptualization, a phenomenon often discussed but rarely operationalized.

“The time-frame corresponds to the expected variability in the construct.”

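A subtle yet crucial reminder: not every construct changes by the hour, so both the item’s time frame (e.g., “right now” vs. “since the last beep”) and your sampling frequency should reflect how quickly the construct actually varies.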

Why this matters for your research

1. It defines what “item quality” means in ESM / EMA research.

For the first time, researchers have a common language to discuss how good an ESM / EMA question is. The checklist can help you design appropriate items.

2. It prevents reinventing the wheel.

Instead of writing yet another “How happy are you right now?” item, you can select existing items (many of them already available in the ESM Item Repository) and evaluate them against shared standards.

3. It supports transparency.

By referencing ESM-Q criteria in methods sections or preregistrations, authors can show how their items measure up against field-wide expectations.

4. It lays out a research agenda for the broader ESM / EMA community.

The Delphi reflections didn’t just yield criteria; they also surfaced open questions about temporal framing, comprehension, and measurement reactivity that future studies can address.

A small step toward cumulative science

The ESM-Q complements ongoing open-science efforts such as the ESM Item Repository, where researchers can deposit, share, and compare items. The long-term vision is a transparent, cumulative database where every item’s quality and usage history are traceable.

For m-Path users and the broader ESM / EMA community, this means easier access to validated, high-quality item sets that can be deployed directly within digital platforms.

Imagine designing a study in m-Path’s dashboard and instantly checking:

✅ Time-frame clear?

✅ Population-appropriate wording?

✅ No double negatives?

✅ ...

That’s the spirit of ESM-Q: bringing methodological rigor into everyday research practice.

The bigger picture

High-frequency data collection is only as strong as the questions we ask. The ESM-Q reminds us that measurement quality deserves as much attention as fancy design features or modeling techniques.

The authors invite the community to test, refine, and expand the ESM-Q. That means:

- Applying it to your current item set.

- Sharing feedback on ambiguous or missing criteria.

- Contributing new evidence on which criteria predict better data quality.

At m-Path, we’ll be watching closely and exploring how such tools can be integrated into the design workflows researchers already use.

If you are designing a new ESM / EMA study, download the ESM-Q template from OSF and try rating a few of your own items. You might be surprised which questions hold up (and which don’t 🙃).

👉 Explore the full paper here: Behavior Research Methods, 2025.